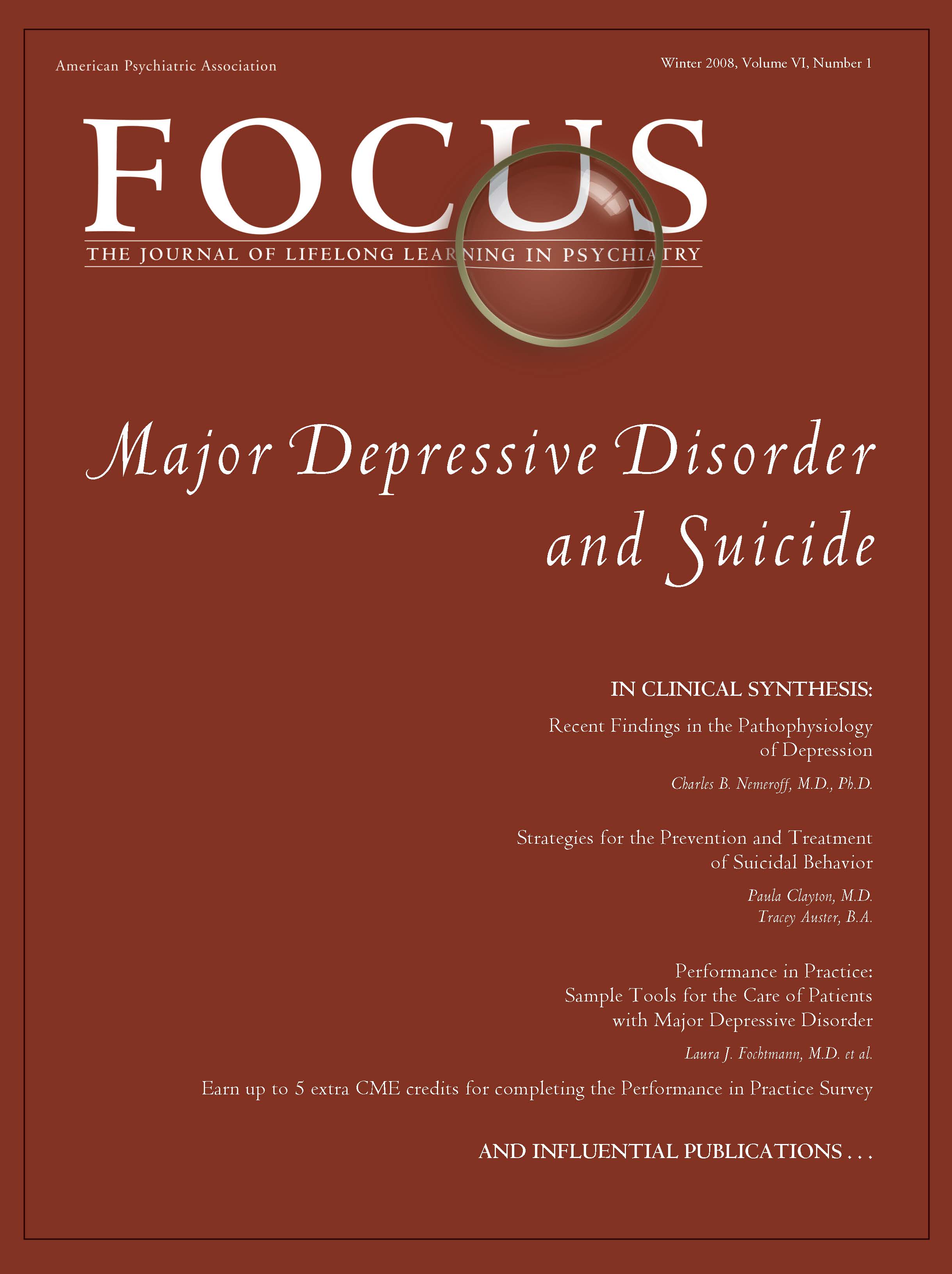

Performance in Practice: Sample Tools for the Care of Patients with Major Depressive Disorder

Abstract

To facilitate continued clinical competence, the American Board of Medical Specialties and the American Board of Psychiatry and Neurology are implementing multi-faceted Maintenance of Certification programs, which include requirements for self-assessments of practice. Because psychiatrists may want to gain experience with self-assessment, two sample performance-in-practice tools are presented that are based on recommendations of the American Psychiatric Association's Practice Guideline for the Treatment of Patients with Major Depressive Disorder. One of these sample tools provides a traditional chart review approach to assessing care; the other sample tool presents a novel approach to real-time evaluation of practice. Both tools can be used as a foundation for subsequent performance improvement initiatives that are aimed at enhancing outcomes for patients with major depressive disorder.

Psychiatrists, like other medical professionals, are confronted by a need to maintain specialty-specific knowledge despite an explosion in the amount of new information and the ongoing demands of clinical practice. Given these challenges, it is not surprising that researchers have consistently found gaps between actual care and recommended best practices (1–10). In attempting to enhance the quality of delivered care, a number of approaches have been tried with varying degrees of success. Didactic approaches, including dissemination of written educational materials or practice guidelines, produce limited behavioral change (11–19). Embedding of patient-specific reminders into routine care can lead to benefits in specific quality measures (11, 13–16, 20–23), but these improvements may be narrow in scope, limited to the period of intervention, or unassociated with improved patient outcomes (24–27). Receiving feedback after self-review or peer review of practice patterns may also produce some enhancements in care (13–15, 23, 28–30). Given the limited effects of the above approaches when implemented alone, the diverse practice styles of physicians, and the multiplicity of contexts in which care is delivered, a combination of quality improvement approaches may be needed to improve patient outcomes (14, 19, 28, 29, 31–34).

With these factors in mind, the American Board of Medical Specialties and the American Board of Psychiatry and Neurology are implementing multi-faceted Maintenance of Certification (MOC) Programs that include requirements for self-assessments of practice through reviewing the care of at least 5 patients (35). As with the original impetus to create specialty board certification, the MOC programs are intended to enhance quality of patient care in addition to assessing and verifying the competence of medical practitioners over time (36, 37). Although the MOC phase-in schedule will not require completion of a Performance in Practice (PIP) unit until 2014 (35), individuals may wish to begin assessing their own practice patterns before that time. To facilitate such self-assessment related to the treatment of depression, this paper will discuss several approaches to reviewing one's clinical practice and will provide sample PIP tools that are based on recommendations of the American Psychiatric Association's Practice Guideline for the Treatment of Patients with Major Depressive Disorder (38).

Traditionally, most quality improvement programs have focused on retrospective assessments of practice at the level of organizations or departments (39). The Healthcare Effectiveness Data and Information Set (HEDIS) measures of the National Committee for Quality Assurance (NCQA) (40) are a commonly used group of quality indicators that measure health organization performance. When used under such circumstances, quality indicators are typically expressed as a percentage that reflects the extent of adherence to a particular indicator. For example, in the quality of care measures for bipolar disorder (41) derived from the American Psychiatric Association's 2002 Practice Guideline for the Treatment of Patients with Bipolar Disorder (42), one of the indicators is that “Patients in an acute depressive episode of bipolar disorder who are treated with antidepressants, [are] also receiving an antimanic agent such as valproate or lithium.” In this example, to calculate the percentage of patients for whom the indicator is fulfilled, the numerator will be the “Number of patients in an acute depressive episode of bipolar disorder, who are receiving an antidepressant, and who are also receiving an anti-manic agent such as valproate or lithium.” and the denominator will be the “Number of patients in an acute depressive episode of bipolar disorder who are receiving an antidepressant” (41).
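The numerator and denominator logic described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `PatientRecord` fields and the `indicator_percentage` function are hypothetical names introduced here, not part of the published quality measures, and the sample records are invented for demonstration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class PatientRecord:
    # Hypothetical fields, named for illustration only.
    acute_bipolar_depression: bool
    on_antidepressant: bool
    on_antimanic_agent: bool


def indicator_percentage(records: Iterable[PatientRecord]) -> Optional[float]:
    """Percentage of eligible patients for whom the indicator is fulfilled.

    Denominator: patients in an acute depressive episode of bipolar
    disorder who are receiving an antidepressant.
    Numerator: those denominator patients who are also receiving an
    antimanic agent.
    Returns None when no patient is eligible (the rate is undefined).
    """
    eligible = [
        r for r in records
        if r.acute_bipolar_depression and r.on_antidepressant
    ]
    if not eligible:
        return None
    fulfilled = sum(1 for r in eligible if r.on_antimanic_agent)
    return 100.0 * fulfilled / len(eligible)


# Invented example: four eligible patients, three of whom meet the indicator.
sample = [
    PatientRecord(True, True, True),
    PatientRecord(True, True, True),
    PatientRecord(True, True, False),
    PatientRecord(True, True, True),
    PatientRecord(True, False, False),  # not on an antidepressant: excluded
]
print(indicator_percentage(sample))  # 75.0
```

Note that the fifth patient does not enter the denominator at all, so the rate reflects only those patients to whom the indicator applies.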

As in the above example, most quality indicators are derived from evidence-based practice guidelines, which are intended to apply to typical patients in a population rather than being universally applicable to all patients with a particular disorder (43, 44). In addition, practice guideline recommendations are mainly informed by data from randomized controlled trials. Patients in such trials may have significant differences from those seen in routine clinical practice (45), including clinical presentation, preference for treatment, response to treatment, and presence of co-occurring psychiatric and general medical conditions (43, 46, 47). These differences may result in treatment decisions for individual patients that are clinically appropriate but not concordant with practice guideline recommendations.

When quality indicators are used to compare individual physicians' practice patterns, quality measures can be influenced by practice size, patients' sociodemographic factors and illness severity as well as other practice-level and patient-level factors. For example, when small groups of patients are receiving care from an individual physician, a small shift in the number of individuals receiving a recommended intervention could lead to large shifts in the resulting rates of concordance with evidence-based care. Without appropriate application of case-mix adjustments, across-practice comparisons may result in erroneous conclusions about the quality of care being delivered (48, 49). For patients with complex conditions or multiple disorders receiving simultaneous treatment, composite measures of overall treatment quality may yield more accurate appraisals than measurement of single quality indicators (50–52).
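The sensitivity of small samples can be made concrete with a short calculation. The sketch below is an illustration only: it shows how much a concordance rate moves when a single patient's status changes, and it uses the Wilson score interval as one common way to express the uncertainty of a proportion. The choice of the Wilson interval and the function names are assumptions made here, not methods taken from the cited studies.

```python
import math


def shift_per_patient(n_patients: int) -> float:
    """Percentage-point change in a rate when one patient's status changes."""
    return 100.0 / n_patients


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Same observed rate (80%) in a small and a large group of patients.
for successes, n in [(4, 5), (80, 100)]:
    low, high = wilson_interval(successes, n)
    print(
        f"n={n}: one patient shifts the rate by {shift_per_patient(n):.0f} points; "
        f"interval {low:.2f} to {high:.2f}"
    )
```

With 5 patients, one patient moves the rate by 20 percentage points and the interval is very wide, whereas with 100 patients the same observed rate is estimated far more tightly.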

With the above caveats, however, use of retrospective quality indicators can be beneficial for individual physicians who wish to assess their own patterns of practice. If a physician's self-assessment identified aspects of care that frequently differed from key quality indicators, further examination of practice patterns would be helpful. Through self-assessment, the physician may determine that deviations from the quality indicators are justified, or may acquire new knowledge and modify practice to improve quality. It is this sort of self-assessment and performance improvement effort that the MOC PIP program is designed to foster.

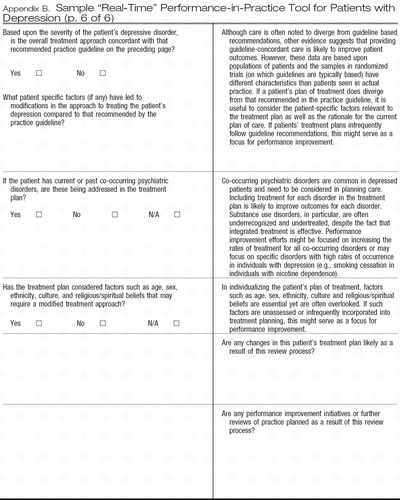

Appendices A and B provide sample PIP tools, each of which is designed to be relevant across clinical settings (e.g., inpatient, outpatient), straightforward to complete, and usable in a pen-and-paper format to aid adoption. Although the MOC program requires review of at least 5 patients as part of each PIP unit, it is important to note that larger samples will provide more accurate estimates of quality within a practice. Appendix A provides a sample retrospective chart review PIP tool that assesses the care given to patients with major depressive disorder. Although it is designed as a self-assessment tool, this form could also be used for retrospective peer-review initiatives. As with other retrospective chart review tools, some questions on the form relate to the initial assessment and treatment of the patient whereas other questions relate to subsequent care. Appendix B provides a prospective review form that is intended to be a cross-sectional assessment and could be completed immediately following a patient visit. As currently formatted, Appendix B is designed to be folded in half to allow real-time feedback based upon answers to the initial practice-based questions. This approach is more typical of clinical decision support systems that provide real-time feedback on the concordance between guideline recommendations and the individual patient's care. In the future, the same data recording and feedback steps could be implemented via a web-based or electronic record system, enhancing integration into clinical workflow (53). This will make it more likely that psychiatrists will see the feedback as interactive, targeted to their needs, and clinically relevant. Rather than relying on more global changes in practice patterns to enhance individual patients' care, such feedback also provides the opportunity to adjust the treatment plan of an individual patient to improve patient-specific outcomes (54–56). However, data from this form could also be used in aggregate to plan and implement broader quality improvement initiatives. For example, if self-assessment using the sample tools suggests that signs and symptoms of depression are inconsistently assessed, consistent use of more formal rating scales such as the PHQ-9 (57–59) could be considered.

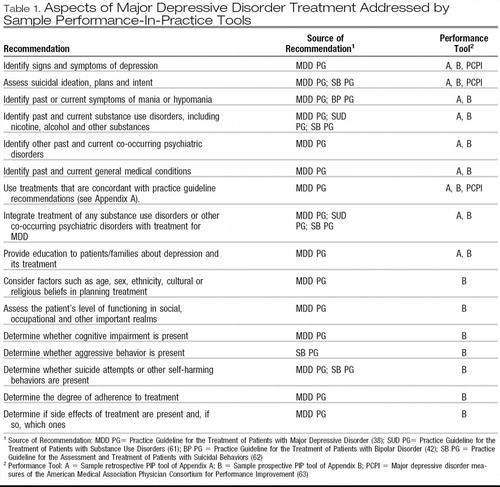

Each of the sample tools attempts to highlight aspects of care that have significant public health implications (e.g., suicide, obesity, use of tobacco and other substances) or for which gaps in guideline adherence are common. Examples include underdetection and undertreatment of co-occurring substance use disorders (5) and the relatively low concordance with practice guideline recommendations for use of psychosocial therapies and for treatment of psychotic features with MDD (4). Table 1 summarizes specific aspects of care that are measured by these sample PIP tools. Quality improvement suggestions that arise from completion of these sample tools are intended to be within the control of individual psychiatrists rather than dependent upon other health care system resources.


Table 1. Aspects of Major Depressive Disorder Treatment Addressed by Sample Performance-In-Practice Tools

After using one of the sample PIP tools to assess the pattern of care given to a group of 5 or more patients with major depressive disorder, the psychiatrist should determine whether specific aspects of care need to be improved. For example, if the presence or absence of co-occurring psychiatric disorders has not been assessed or if these disorders are present but not addressed in the treatment plan, then a possible area for improvement would involve greater consideration of co-occurring psychiatric disorders, which are common in patients with MDD.

These sample PIP tools can also serve as a foundation for more elaborate approaches to improving psychiatric practice as part of the MOC program. If systems are developed so that practice-related data can be entered electronically (either as part of an electronic health record or as an independent web-based application), algorithms can suggest areas for possible improvement using specific, measurable, achievable, relevant, and time-limited objectives (60). Such electronic systems could also provide links to journal or textbook materials, clinical practice guidelines, patient educational materials, drug-drug interaction checking, evidence-based tool kits, or other clinical materials. In addition, future work will focus on developing more standardized approaches to integrating patient and peer feedback with personal performance review, developing and implementing programs of performance improvement, and reassessing performance and patient outcomes.

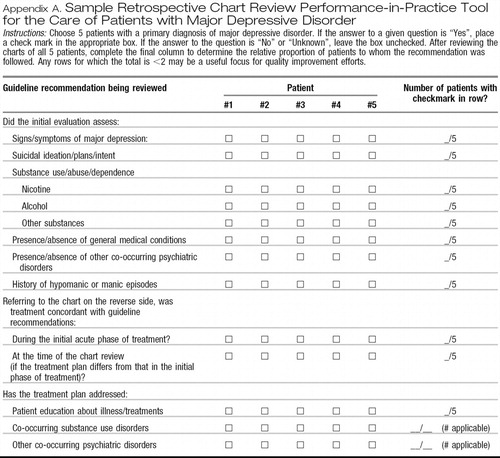

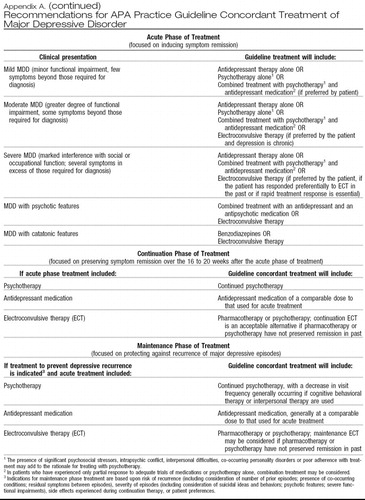

Appendix A

Sample Retrospective Chart Review Performance-in-Practice Tool for the Care of Patients with Major Depressive Disorder


Instructions: Choose 5 patients with a primary diagnosis of major depressive disorder. If the answer to a given question is “Yes”, place a check mark in the appropriate box. If the answer to the question is “No” or “Unknown”, leave the box unchecked. After reviewing the charts of all 5 patients, complete the final column to determine the relative proportion of patients for whom the recommendation was followed. Any rows for which the total is <2 may be a useful focus for quality improvement efforts.
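For readers who prefer to tally the form electronically, the row totals described in these instructions could be computed along the following lines. This is a minimal sketch under stated assumptions: the question labels are hypothetical placeholders rather than the actual wording of the Appendix A form, `tally_chart_review` is a name introduced here, and the default threshold of 2 simply mirrors the instruction above.

```python
from typing import Dict, List, Optional


def tally_chart_review(
    responses: Dict[str, List[Optional[bool]]],
    threshold: int = 2,
) -> Dict[str, dict]:
    """Count "Yes" answers per question and flag low-scoring rows.

    Each question maps to one answer per reviewed chart:
    True = "Yes" (box checked); False or None = "No"/"Unknown" (unchecked).
    A row is flagged when its total is below the threshold.
    """
    results = {}
    for question, answers in responses.items():
        total = sum(1 for answer in answers if answer is True)
        results[question] = {
            "total": total,
            "charts_reviewed": len(answers),
            "flag_for_improvement": total < threshold,
        }
    return results


# Invented answers for 5 charts; question labels are placeholders.
example = {
    "Suicide risk assessed": [True, True, True, False, True],
    "Substance use assessed": [True, None, False, False, None],
}
for question, row in tally_chart_review(example).items():
    print(question, row)
```

In this invented example the second row has a total of 1 and would be flagged as a possible focus for quality improvement.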

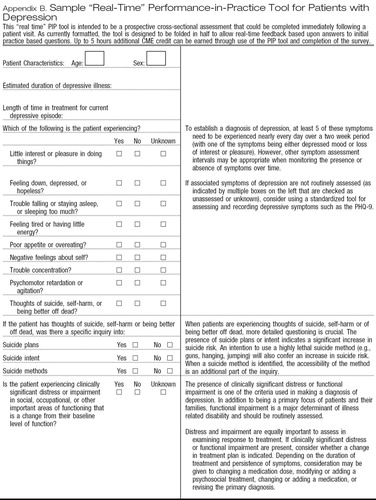

Appendix B

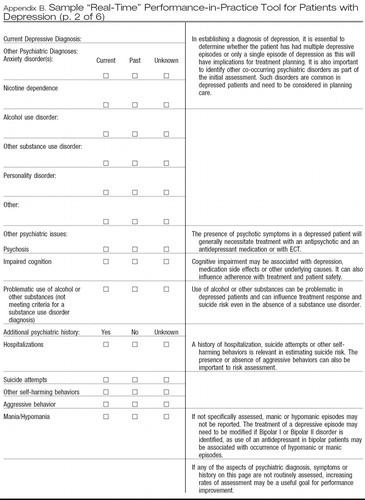

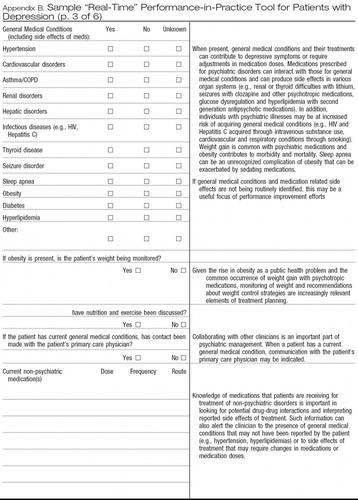

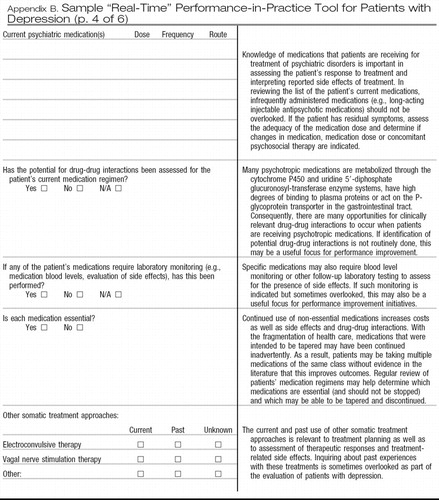

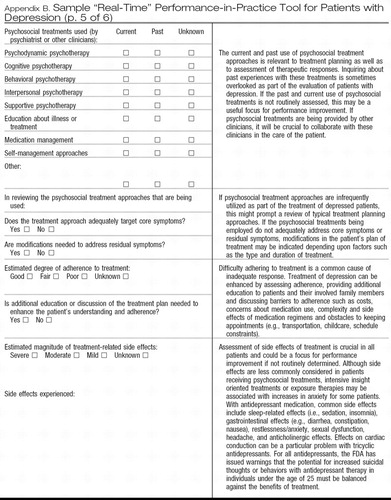

Sample “Real-Time” Performance-in-Practice Tool for Patients with Depression


This “real-time” PIP tool is intended to be a prospective cross-sectional assessment that could be completed immediately following a patient visit. As currently formatted, the tool is designed to be folded in half to allow real-time feedback based upon answers to initial practice-based questions. Up to 5 hours of additional CME credit can be earned through use of the PIP tool and completion of the survey.

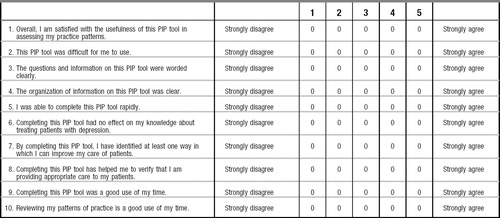

Sample “Real-Time” Performance in Practice Tool for Patients with Depression: Survey Form and CME Certification

Begin date: February 2008. End date: February 2010.

To earn CME credit for this Survey Program, psychiatrists should use the Sample Real Time Performance in Practice Tool as indicated. After using the performance in practice tool, participants should fully complete this survey and send it by mail to APA CME, 1000 Wilson Boulevard, Suite 1825, Rosslyn, VA 22209, or fax to 703 907 7849, or send by email to [email protected].

Objective: After completion of this activity psychiatrists will have the foundation for subsequent performance improvement initiatives aimed at enhancing outcomes for patients with major depressive disorder.

The APA is accredited by the Accreditation Council for Continuing Medical Education (ACCME) to provide continuing medical education for physicians. APA designates this educational activity for a maximum of 5 AMA PRA Category 1 credits. Physicians should only claim credit commensurate with the extent of their participation in the activity.

List the most helpful aspects of this PIP tool:

1.

2.

3.

List the least helpful aspects of this PIP tool:

1.

2.

3.

How do you plan to use the information gained from this self-assessment in your practice?

How might we improve upon this PIP tool in the future?

Additional comments:

Please evaluate the effectiveness of this CME activity by answering the following questions.

1. Achievement of educational objectives: YES____ NO____

2. Material was presented without bias: YES____ NO____

American Psychiatric Association CME, 1000 Wilson Blvd., Suite 1825, Arlington, VA 22209-3901. Telephone: (703) 907-8637, Fax: (703) 907-7849. To earn credit, complete and send this page. Retain a copy of this form for your records.

Number of hours you spent on this activity ______________ (understanding & using the tool and completing the survey up to 5 hours)

Date____________________________

APA Member: Yes_____ No_____

Member number_________________

__________________________________________

Last name First name Middle initial Degree

__________________________________________

Mailing address

________________________

City State Zip code Country

________

Fax number

E-mail address:______________________________

I would like to receive my certificate by:

Fax______ E-mail______.

References

1 Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: National Academy Press; 2001.

2 Institute of Medicine. Improving the quality of health care for mental and substance-use conditions. Washington, DC: National Academies Press; 2006.

3 Colenda CC, Wagenaar DB, Mickus M, Marcus SC, Tanielian T, Pincus HA. Comparing clinical practice with guideline recommendations for the treatment of depression in geriatric patients: findings from the APA practice research network. Am J Geriatr Psychiatry 2003 7; 11(4): 448–57.

4 West JC, Duffy FF, Wilk JE, Rae DS, Narrow WE, Pincus HA, et al. Patterns and quality of treatment for patients with major depressive disorder in routine psychiatric practice. Focus 2005; 3(1): 43–50.

5 Wilk JE, West JC, Narrow WE, Marcus S, Rubio-Stipec M, Rae DS, et al. Comorbidity patterns in routine psychiatric practice: is there evidence of underdetection and underdiagnosis? Compr Psychiatry 2006 7; 47(4): 258–64.

6 Pincus HA, Page AE, Druss B, Appelbaum PS, Gottlieb G, England MJ. Can psychiatry cross the quality chasm? Improving the quality of health care for mental and substance use conditions. Am J Psychiatry 2007 5; 164(5): 712–9.

7 Rost K, Dickinson LM, Fortney J, Westfall J, Hermann RC. Clinical improvement associated with conformance to HEDIS-based depression care. Ment Health Serv Res 2005 6; 7(2): 103–12.

8 Cochrane LJ, Olson CA, Murray S, Dupuis M, Tooman T, Hayes S. Gaps between knowing and doing: understanding and assessing the barriers to optimal health care. J Contin Educ Health Prof 2007; 27(2): 94–102.

9 Chen RS, Rosenheck R. Using a computerized patient database to evaluate guideline adherence and measure patterns of care for major depression. J Behav Health Serv Res 2001 11; 28(4): 466–74.

10 Cabana MD, Rushton JL, Rush AJ. Implementing practice guidelines for depression: applying a new framework to an old problem. Gen Hosp Psychiatry 2002 1; 24(1): 35–42.

11 Davis D. Does CME work? An analysis of the effect of educational activities on physician performance or health care outcomes. Int J Psychiatry Med 1998; 28(1): 21–39.

12 Sohn W, Ismail AI, Tellez M. Efficacy of educational interventions targeting primary care providers' practice behaviors: an overview of published systematic reviews. J Public Health Dent 2004; 64(3): 164–72.

13 Bloom BS. Effects of continuing medical education on improving physician clinical care and patient health: a review of systematic reviews. Int J Technol Assess Health Care 2005; 21(3): 380–5.

14 Chaillet N, Dube E, Dugas M, Audibert F, Tourigny C, Fraser WD, et al. Evidence-based strategies for implementing guidelines in obstetrics: a systematic review. Obstet Gynecol 2006 11; 108(5): 1234–45.

15 Grimshaw J, Eccles M, Thomas R, MacLennan G, Ramsay C, Fraser C, et al. Toward evidence-based quality improvement. Evidence (and its limitations) of the effectiveness of guideline dissemination and implementation strategies 1966–1998. J Gen Intern Med 2006 2; 21 Suppl 2: S14–S20.

16 Grimshaw JM, Shirran L, Thomas R, Mowatt G, Fraser C, Bero L, et al. Changing provider behavior: an overview of systematic reviews of interventions. Med Care 2001 8; 39(8 Suppl 2): II2–45.

17 Grol R. Changing physicians' competence and performance: finding the balance between the individual and the organization. J Contin Educ Health Prof 2002; 22(4): 244–51.

18 Oxman TE. Effective educational techniques for primary care providers: application to the management of psychiatric disorders. Int J Psychiatry Med 1998; 28(1): 3–9.

19 Green LA, Wyszewianski L, Lowery JC, Kowalski CP, Krein SL. An observational study of the effectiveness of practice guideline implementation strategies examined according to physicians' cognitive styles. Implement Sci 2007 12 1; 2(1): 41.

20 Balas EA, Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving preventive care by prompting physicians. Arch Intern Med 2000 2 14; 160(3): 301–8.

21 Feldstein AC, Smith DH, Perrin N, Yang X, Rix M, Raebel MA, et al. Improved therapeutic monitoring with several interventions: a randomized trial. Arch Intern Med 2006 9 25; 166(17): 1848–54.

22 Kucher N, Koo S, Quiroz R, Cooper JM, Paterno MD, Soukonnikov B, et al. Electronic alerts to prevent venous thromboembolism among hospitalized patients. N Engl J Med 2005 3 10; 352(10): 969–77.

23 Weingarten SR, Henning JM, Badamgarav E, Knight K, Hasselblad V, Gano A Jr, et al. Interventions used in disease management programmes for patients with chronic illness-which ones work? Meta-analysis of published reports. BMJ 2002 10 26; 325(7370): 925.

24 O'Connor PJ, Crain AL, Rush WA, Sperl-Hillen JM, Gutenkauf JJ, Duncan JE. Impact of an electronic medical record on diabetes quality of care. Ann Fam Med 2005 7; 3(4): 300–6.

25 Rollman BL, Hanusa BH, Lowe HJ, Gilbert T, Kapoor WN, Schulberg HC. A randomized trial using computerized decision support to improve treatment of major depression in primary care. J Gen Intern Med 2002 7; 17(7): 493–503.

26 Sequist TD, Gandhi TK, Karson AS, Fiskio JM, Bugbee D, Sperling M, et al. A randomized trial of electronic clinical reminders to improve quality of care for diabetes and coronary artery disease. J Am Med Inform Assoc 2005 7; 12(4): 431–7.

27 Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ, et al. Can computer-generated evidence-based care suggestions enhance evidence-based management of asthma and chronic obstructive pulmonary disease? A randomized, controlled trial. Health Serv Res 2005 4; 40(2): 477–97.

28 Arnold SR, Straus SE. Interventions to improve antibiotic prescribing practices in ambulatory care. Cochrane Database Syst Rev 2005; (4): CD003539.

29 Bradley EH, Holmboe ES, Mattera JA, Roumanis SA, Radford MJ, Krumholz HM. Data feedback efforts in quality improvement: lessons learned from US hospitals. Qual Saf Health Care 2004 2; 13(1): 26–31.

30 Paukert JL, Chumley-Jones HS, Littlefield JH. Do peer chart audits improve residents' performance in providing preventive care? Acad Med 2003 10; 78(10 Suppl): S39–S41.

31 Roumie CL, Elasy TA, Greevy R, Griffin MR, Liu X, Stone WJ, et al. Improving blood pressure control through provider education, provider alerts, and patient education: a cluster randomized trial. Ann Intern Med 2006 8 1; 145(3): 165–75.

32 Hysong SJ, Best RG, Pugh JA. Clinical practice guideline implementation strategy patterns in Veterans Affairs primary care clinics. Health Serv Res 2007 2; 42(1 Pt 1): 84–103.

33 Dykes PC, Acevedo K, Boldrighini J, Boucher C, Frumento K, Gray P, et al. Clinical practice guideline adherence before and after implementation of the HEARTFELT (HEART Failure Effectiveness & Leadership Team) intervention. J Cardiovasc Nurs 2005 9; 20(5): 306–14.

34 Greene RA, Beckman H, Chamberlain J, Partridge G, Miller M, Burden D, et al. Increasing adherence to a community-based guideline for acute sinusitis through education, physician profiling, and financial incentives. Am J Manag Care 2004 10; 10(10): 670–8.

35 American Board of Psychiatry and Neurology. Maintenance of Certification for Psychiatry. 2007. Accessed on 11-23-2007. Available from: URL: http://www.abpn.com/moc_psychiatry.htm

36 Institute of Medicine. Health professions education: A bridge to quality. Washington, DC: National Academies Press; 2003.

37 Miller SH. American Board of Medical Specialties and repositioning for excellence in lifelong learning: maintenance of certification. J Contin Educ Health Prof 2005; 25(3): 151–6.

38 American Psychiatric Association. Practice guideline for the treatment of patients with major depressive disorder (revision). Am J Psychiatry 2000 4; 157(4 Suppl): 1–45.

39 Hermann RC. Improving mental healthcare: A guide to measurement-based quality improvement. Washington, DC: American Psychiatric Pub; 2005.

40 National Committee for Quality Assurance. HEDIS and quality measurement. National Committee for Quality Assurance 2007. Accessed on 12-30-2007. Available from: URL: http://web.ncqa.org/tabid/59/Default.aspx

41 Duffy FF, Narrow W, West JC, Fochtmann LJ, Kahn DA, Suppes T, et al. Quality of care measures for the treatment of bipolar disorder. Psychiatr Q 2005; 76(3): 213–30.

42 American Psychiatric Association. Practice guideline for the treatment of patients with bipolar disorder (revision). Am J Psychiatry 2002 4; 159(4 Suppl): 1–50.

43 American Psychiatric Association. American Psychiatric Association practice guidelines for the treatment of psychiatric disorders. Arlington, Va: American Psychiatric Association; 2006.

44 Sachs GS, Printz DJ, Kahn DA, Carpenter D, Docherty JP. The Expert Consensus Guideline Series: Medication Treatment of Bipolar Disorder 2000. Postgrad Med 2000 4; Spec No: 1–104.

45 Zarin DA, Young JL, West JC. Challenges to evidence-based medicine: a comparison of patients and treatments in randomized controlled trials with patients and treatments in a practice research network. Soc Psychiatry Psychiatr Epidemiol 2005 1; 40(1): 27–35.

46 Eddy D. Reflections on science, judgment, and value in evidence-based decision making: a conversation with David Eddy by Sean R. Tunis. Health Aff (Millwood) 2007 7; 26(4): w500–w515.

47 Kobak KA, Taylor L, Katzelnick DJ, Olson N, Clagnaz P, Henk HJ. Antidepressant medication management and Health Plan Employer Data Information Set (HEDIS) criteria: reasons for nonadherence. J Clin Psychiatry 2002 8; 63(8): 727–32.

48 Hofer TP, Hayward RA, Greenfield S, Wagner EH, Kaplan SH, Manning WG. The unreliability of individual physician “report cards” for assessing the costs and quality of care of a chronic disease. JAMA 1999 6 9; 281(22): 2098–105.

49 Greenfield S, Kaplan SH, Kahn R, Ninomiya J, Griffith JL. Profiling care provided by different groups of physicians: effects of patient case-mix (bias) and physician-level clustering on quality assessment results. Ann Intern Med 2002 1 15; 136(2): 111–21.

50 Parkerton PH, Smith DG, Belin TR, Feldbau GA. Physician performance assessment: nonequivalence of primary care measures. Med Care 2003 9; 41(9): 1034–47.

51 Lipner RS, Weng W, Arnold GK, Duffy FD, Lynn LA, Holmboe ES. A three-part model for measuring diabetes care in physician practice. Acad Med 2007 10; 82(10 Suppl): S48–S52.

52 Nietert PJ, Wessell AM, Jenkins RG, Feifer C, Nemeth LS, Ornstein SM. Using a summary measure for multiple quality indicators in primary care: the Summary QUality InDex (SQUID). Implement Sci 2007; 2: 11.

53 Rollman BL, Gilbert T, Lowe HJ, Kapoor WN, Schulberg HC. The electronic medical record: its role in disseminating depression guidelines in primary care practice. Int J Psychiatry Med 1999; 29(3): 267–86.

54 Trivedi MH, Daly EJ. Measurement-based care for refractory depression: a clinical decision support model for clinical research and practice. Drug Alcohol Depend 2007 5; 88 Suppl 2: S61–S71.

55 Hepner KA, Rowe M, Rost K, Hickey SC, Sherbourne CD, Ford DE, et al. The effect of adherence to practice guidelines on depression outcomes. Ann Intern Med 2007 9 4; 147(5): 320–9.

56 Dennehy EB, Suppes T, Rush AJ, Miller AL, Trivedi MH, Crismon ML, et al. Does provider adherence to a treatment guideline change clinical outcomes for patients with bipolar disorder? Results from the Texas Medication Algorithm Project. Psychol Med 2005 12; 35(12): 1695–706.

57 Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med 2001 9; 16(9): 606–13.

58 Lowe B, Kroenke K, Herzog W, Grafe K. Measuring depression outcome with a brief self-report instrument: sensitivity to change of the Patient Health Questionnaire (PHQ-9). J Affect Disord 2004 7; 81(1): 61–6.

59 Lowe B, Schenkel I, Carney-Doebbeling C, Gobel C. Responsiveness of the PHQ-9 to Psychopharmacological Depression Treatment. Psychosomatics 2006 1; 47(1): 62–7.

60 MacDonald G, Starr G, Schooley M, Yee SL, Klimowski K, Turner K. Introduction to program evaluation for comprehensive tobacco control programs. Centers for Disease Control and Prevention 2001. Accessed on 12-17-2007. Available from: URL: http://www.cdc.gov/tobacco/tobacco_control_programs/surveillance_evaluation/evaluation_manual/00_pdfs/Evaluation.pdf

61 American Psychiatric Association. Practice guideline for the treatment of patients with substance use disorders, second edition. Am J Psychiatry 2007 4; 164(4 Suppl): 5–123.

62 American Psychiatric Association. Practice guideline for the assessment and treatment of patients with suicidal behaviors. Am J Psychiatry 2003 11; 160(11 Suppl): 1–60.

63 American Medical Association Physician Consortium for Performance Improvement. AMA (CQI) Major depressive disorder. American Medical Association Physician Consortium for Performance Improvement. Accessed on 12-17-2007. Available from: URL: http://www.ama-assn.org/ama/pub/category/16494.html